Samyang / Rokinon / Vivitar / Bower 35mm f/1.4 Lens Review: UPDATED

Let's get to something from the vintage vs. new lens shootout I did a few weeks ago: a review of the Samyang / Rokinon 35mm f/1.4 lens.

I’ve been using the Rokinon version of this lens for several months (years now). It’s become one of my favorite lenses. Let’s take a look at why, and go into the details of the various versions of this lens that are out there. I’m also NOT pulling punches on where this lens comes up short, because there are places where it does. These areas may or may not matter to you.

Samyang is officially the company that makes this lens, but it’s sold under several other names, including Rokinon, Vivitar and Bower. There are probably a few others out there as well.

Is There A Difference Between Vendor Brands Besides Price?

YES! Besides price, there is one main difference: the direction the lens focuses in. If you shoot Nikon, with its backwards (to everyone else) focus turn, then you’ll be OK with the Samyang OEM-branded version of this lens. If you shoot Canon, cine glass or most other brands, you’ll want the Rokinon version, because it focuses in the normal direction. Bower seems to focus either way depending on the lot, and Vivitar focuses backwards.

The Good

Let’s get right to the point: freaking awesome optical performance! I’m thrilled with it. Even wide open it’s a great performer for the price. OK, so a Canon L lens _will_ be a bit better, but 2X-3X the price better? That’s up to you. If you make your living competing with 20 other shooters for the same shot, or get top dollar for your day rate and $ doesn’t matter, go ahead and just get the Canon. For everyone else who might prefer more value for their money, this lens is a no-brainer. It’s sharp and contrasty wide open. Yes, there is some CA / diffusion, but it’s very minimal compared to a lot of older glass. You’ll instantly see how nice it is in comparison. This is pretty close to L glass in overall performance, without the big price.

It’s Manual Focus

Yup! No AF here to talk about. You get a nice 180-degree turn of the lens barrel. Smooth and silky with hard stops, this is the real thing. Internal focus means the lens barrel stays at a constant length and the front doesn’t rotate. This lens works great with a short extension tube for closer focusing.

Focusing this lens is a pleasure thanks to its range and smoothness, a very big plus here. By comparison, another, more expensive lens has only a 90-ish degree focus turn.

Beyond Infinity

OK, this is weird, but my lens focuses past infinity. Long lenses will do this because when they get cold, parts contract, and you need the extra range to focus to actual infinity. However, I can’t think of any reason why this lens does so except poor factory calibration. Maybe it’s something due to the internal focus, but I doubt it. Seems like less than ideal QA. UPDATE: I’ve done some poking around the web, and it seems this is just how this lens is made. It’s not a deal breaker, but if you spin the lens to the infinity hard stop expecting it to be sharp, it won’t be. That’s REALLY REALLY bad for people shooting stills in fast-moving situations, where you expect that hitting the hard stop means you are at infinity. Instead you HAVE to look at the lens to set it there, or use the LCD screen with magnification on to check critical focus.

Lens Markings

This probably isn’t something you’ll see written up, but since this is very much a manual lens, I need to bring it up. The focus markings are really skimpy. I have no idea why, because it makes no sense. Look at this close-up shot. Why can’t 5, 7 and 20 ft be marked? It’s not like it would cost any money. It makes the well-done depth of field markings next to useless for even modest work. It’s this sort of stupid shortcoming that can make or break a product. If the optical performance of this lens were any less, this would be part of my reason to NOT buy this lens. Seriously, DoF markings on a fully manual lens matter, and cheating here is, well, leaving me speechless.
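To put some numbers on why in-between distance marks matter, here is a minimal Python sketch of the standard thin-lens depth-of-field formulas. The 0.03 mm circle of confusion (a common full-frame value that DoF scales are often engraved for) and the f/8 setting are my assumptions, not anything printed on this lens, and `hyperfocal_mm` / `dof_limits_mm` are just illustrative names. With those assumptions, the hyperfocal distance of a 35mm lens at f/8 comes out around 17 ft, exactly the sort of in-between distance a 20 ft mark would help you set by eye.

```python
import math

MM_PER_FT = 304.8  # exact feet-to-millimetre conversion


def hyperfocal_mm(focal_mm: float, f_number: float, coc_mm: float = 0.03) -> float:
    """Hyperfocal distance H = f^2 / (N * c) + f, all in millimetres.

    coc_mm defaults to 0.03 mm, an assumed full-frame circle of confusion.
    """
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def dof_limits_mm(focal_mm: float, f_number: float, subject_mm: float,
                  coc_mm: float = 0.03) -> tuple[float, float]:
    """Near and far limits of acceptable sharpness for a given focus distance.

    Standard thin-lens approximations; the far limit becomes math.inf
    once the focus distance reaches the hyperfocal distance.
    """
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    if subject_mm >= h:
        far = math.inf
    else:
        far = subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far


if __name__ == "__main__":
    f, n = 35.0, 8.0  # 35mm lens stopped down to an assumed f/8
    print(f"Hyperfocal at f/{n:g}: {hyperfocal_mm(f, n) / MM_PER_FT:.1f} ft")
    # The focus distances the review wishes were engraved on the barrel
    for feet in (5, 7, 20):
        near, far = dof_limits_mm(f, n, feet * MM_PER_FT)
        far_txt = "infinity" if math.isinf(far) else f"{far / MM_PER_FT:.1f} ft"
        print(f"Focused at {feet} ft: sharp from {near / MM_PER_FT:.1f} ft to {far_txt}")
```

This is only as good as the assumed circle of confusion; a stricter value (for pixel-peeping on a high-resolution sensor) shrinks every range it prints.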

UPDATE

Rokinon seems to have heard this comment: their later lenses have improved markings a bit, for example by adding DoF markings.

I’ll also note there’s no IR focus mark. OK, talking about IR film is SERIOUSLY old school, but some folks do mod DSLRs and remove the IR filter. The mark should be there. I’ll GUESS it’s around 2.8 to 5.6 on the RIGHT side of the focus mark. Really, it would have cost NOTHING to include it!

Something Is Shaking Inside

I’ve confirmed this on 2 copies of this lens. Shake the lens and something is wobbling around inside. WTF moment for sure. It seems like the floating focus elements have some play. I’m not talking about shaking this lens like a paint mixer, just some easy up-and-down motion, the sort of motion you might get when shooting from a car, boat or plane. Could this wobbling mess with your image? I don’t know, because I haven’t tried it yet!

UPDATE

There was something wrong with the lens. I sent it to Rokinon and they “repaired” it by simply replacing it with a new one. THANKS! That’s what good service should be, and without any hassle. Also note that I now have the cine version of the lens and will be doing a review of it shortly.

Plastic!

The lens front is plastic. Bummer. Not a deal breaker, but disappointing. Upside? Filters will tend to get stuck less often and will be easier to get off when overtightened. The lens itself is plastic externally and aluminum internally, which reduces its weight.

To be sure, it’s a big handful of glass, so I’ve got no complaints. We’ve had plastic lenses for years and they seem to hold up just fine. Plastic is also nicer to handle in cold conditions. I had no problems putting a focus gear on this. Also consider that the big-name lens makers are making theirs out of plastic too…

Iris Ring

It’s got click stops. For stills, this is OK. For cine work, there is a de-clicked Cine version. All that really means is that a single small round steel ball isn’t installed in the iris ring control. Really, that’s it; there’s no magic to “declicking” an iris ring. Why do they charge extra for leaving something out? The Cine version is available as a Samyang-branded lens.

Shallow enough depth of field? Wide open on a 60D @ ISO 160. With manual focus and a DoF of about 2-3 inches, you’d better be on the money. Going to 2.8 might be your friend if you need a little margin for safety.
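As a rough sanity check on that 2-3 inch figure, here is a sketch using the common close-up approximation, total DoF ≈ 2·N·c·s²/f², which holds when the subject is much closer than the hyperfocal distance. The 0.019 mm circle of confusion (a typical value for the 60D’s APS-C sensor) and the 4 ft subject distance are my assumptions; the shot above doesn’t say how far away the subject was. `approx_total_dof_mm` is an illustrative helper name.

```python
MM_PER_INCH = 25.4
MM_PER_FT = 304.8


def approx_total_dof_mm(focal_mm: float, f_number: float, subject_mm: float,
                        coc_mm: float = 0.019) -> float:
    """Approximate total depth of field, 2 * N * c * s^2 / f^2, in millimetres.

    Only valid when subject_mm is far below the hyperfocal distance,
    which holds for close shots with a 35mm lens near wide open.
    coc_mm defaults to an assumed APS-C circle of confusion.
    """
    return 2 * f_number * coc_mm * subject_mm ** 2 / focal_mm ** 2


if __name__ == "__main__":
    subject = 4 * MM_PER_FT  # assumed ~4 ft subject distance
    for n in (1.4, 2.8):
        dof_in = approx_total_dof_mm(35.0, n, subject) / MM_PER_INCH
        print(f"f/{n}: about {dof_in:.1f} in of total depth of field")
```

Under these assumptions f/1.4 lands at roughly 2.5 inches, in line with the estimate above, and since DoF scales linearly with the f-number in this approximation, stopping to 2.8 roughly doubles it to about 5 inches.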

Optical Performance

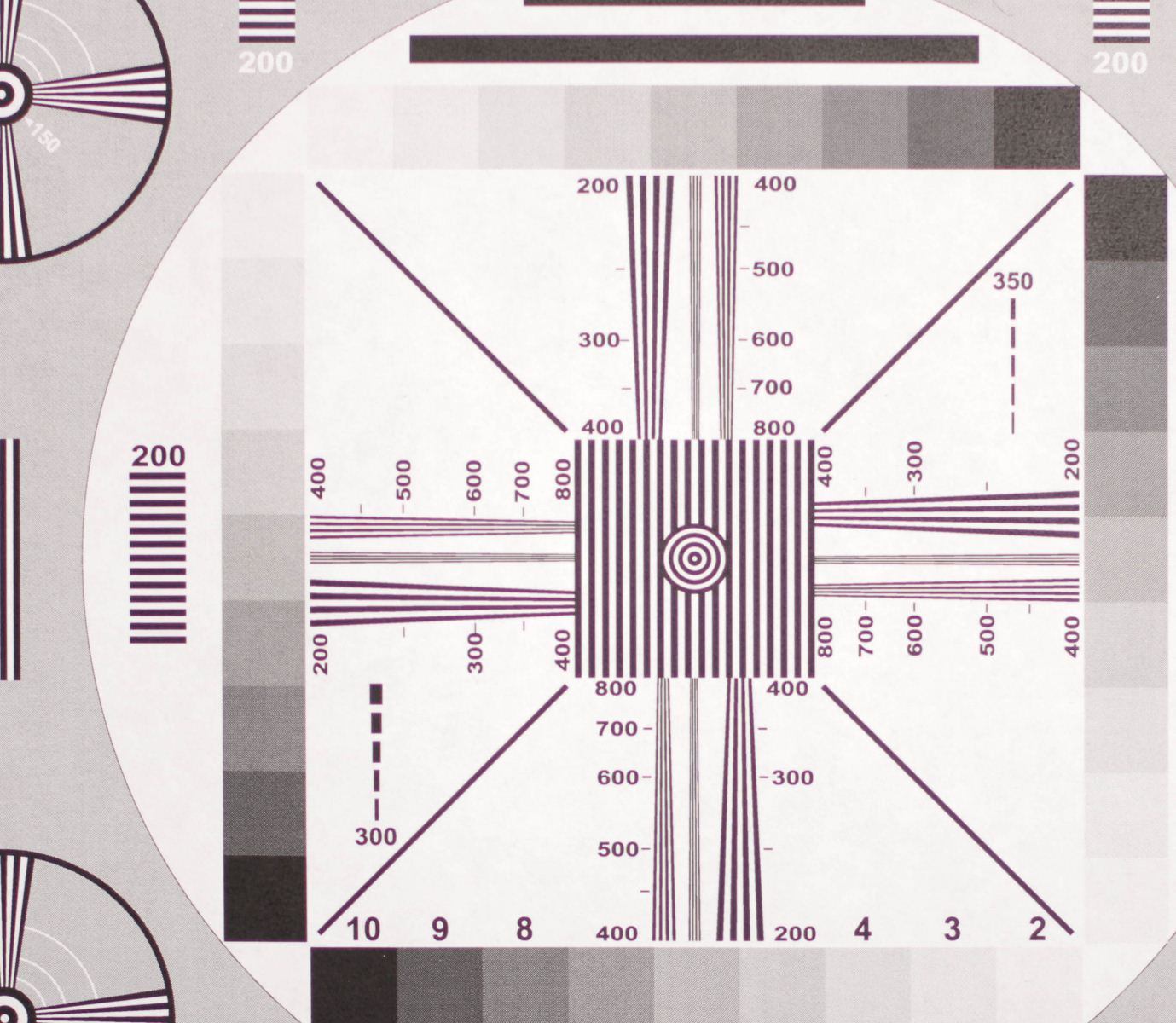

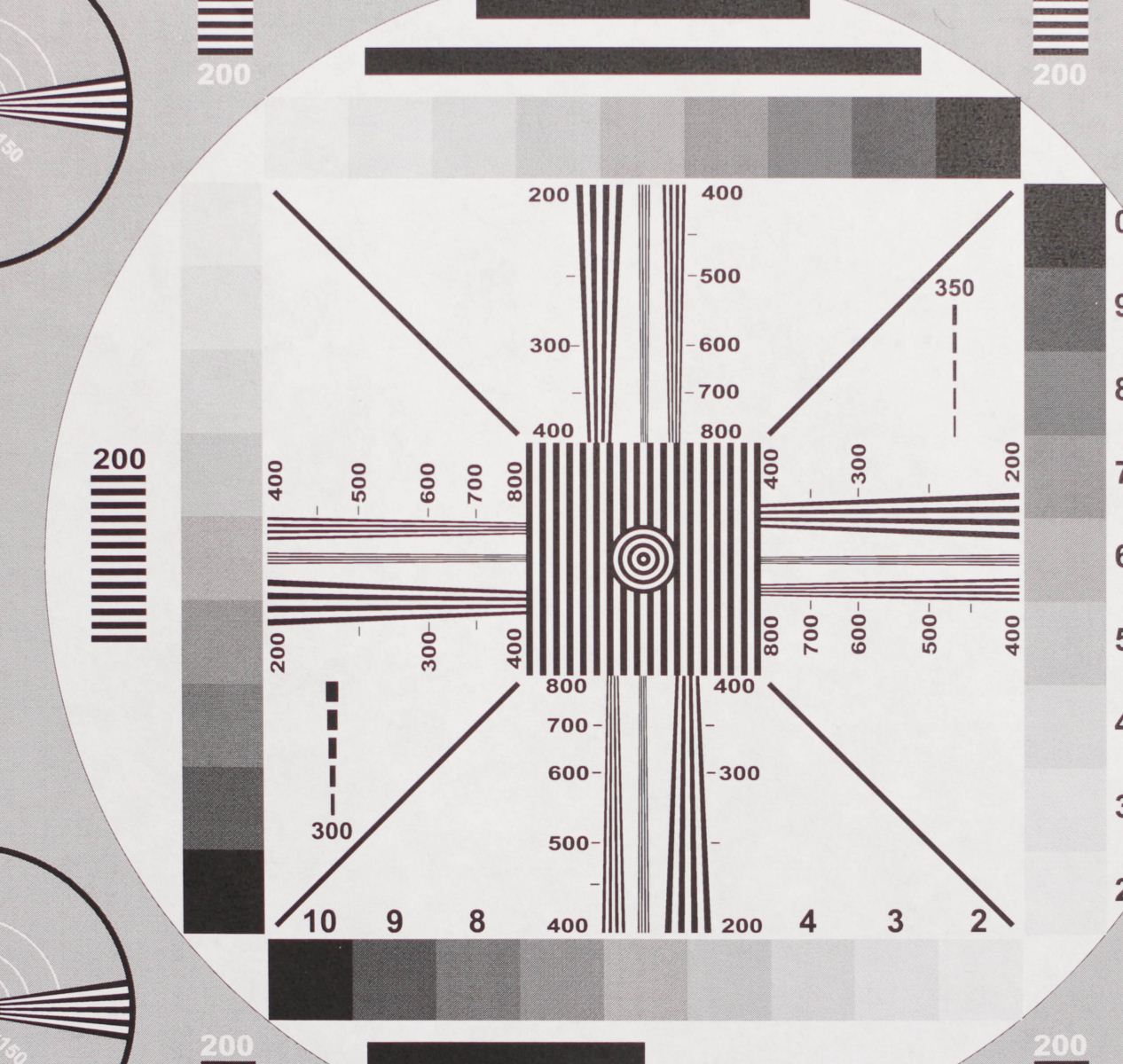

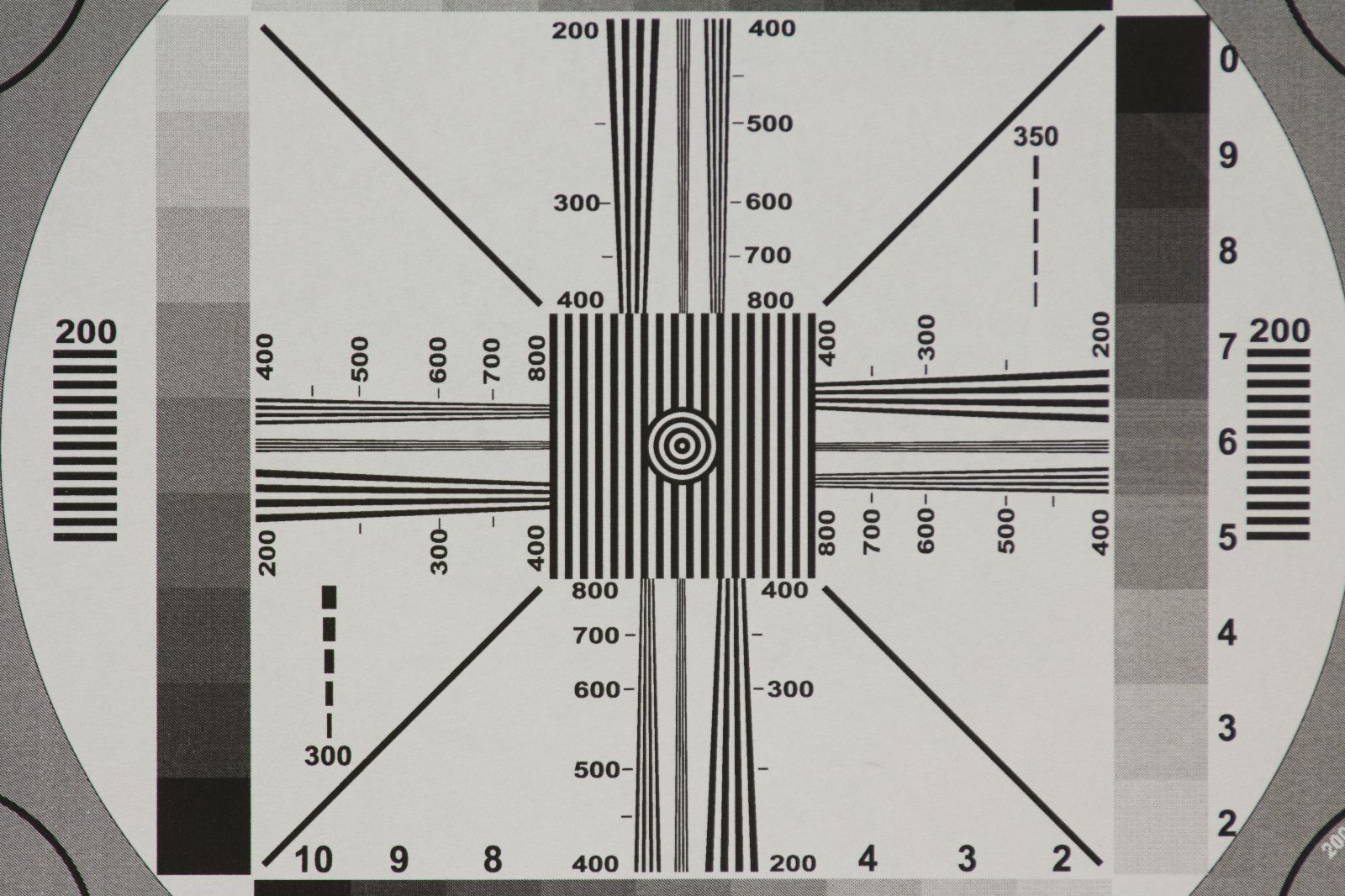

It’s awesome for the price. It’s sharp where you want it, with depth of field falloff blur where you want it. At 1.4, focus carefully! Your margin of error is about 1 inch. OK, gory details now:

Wide open at 1.4, above. All sharp, with some loss of contrast, as is to be expected. This is pretty minimal compared to a lot of other lenses out there, and it’s great performance.

f/5.6: What else is there to say? It’s SHARP and CONTRASTY!

BOKEH

Another odd point of this lens. Dead-center bokeh looks great, as you’d expect. Going off center, the highlights take on an odd, off shape I’d call cat eyes. This ONLY happens wide open or close to it. Stopping down a bit makes octagons. There is also a “wooly” effect at times in the highlights. It’s some sort of interference pattern, most likely caused by 2 lens elements not being aligned perfectly. It’s nothing that has an adverse effect on normal image quality, but it does show up, as you can see in my examples.

The wide open “cat eyes”. These are street lights far away. I’m focused at minimum distance, making the effect most noticeable. Also note the “wooly” effect.

Stop down and the cat eyes effect goes away, giving much more symmetrical highlights. This shot is a full 1080 frame, shrunk down.

Here is a larger section showing the “wooly” effect again, in 1080.

Conclusion

Speaking of cat eyes, are you going to argue with this shot? Yes, this lens has the sort of personality that just grabs you. It’s the look that defined the start of the DSLR revolution, with the ultra-shallow DoF effects that got everyone excited. It’s the lens that will change how you shoot if you have never worked wide open at 1.4 before.

This lens has some flaws, to be sure. Would that stop me from buying it? No. It’s still a great deal compared to other 35 1.4s. Some compromises are to be expected, but none here will stop you from making great images. OK, maybe the focus-past-infinity thing could become a pain, but once you get used to it, it’s not the end of the world.

I’m shooting a lot with this lens these days, including some quick portrait work. For video it’s a great lens that sits between wide and long, yet wide open it has “the look” of longer glass. I’m thrilled with it despite its shortcomings, which are not major, just annoying.