Yes and no. How is that for an answer?

It depends. In this high-contrast example, a flat shooting setting records the most dynamic range within the limits of the codec. However, in an upcoming test, I’ll show you a case where shooting flat isn’t the best choice.

Canon EOS 60D Dynamic Range Contrast Adjustment Test from Steve Oakley on Vimeo.

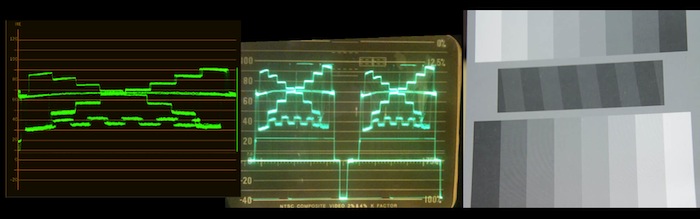

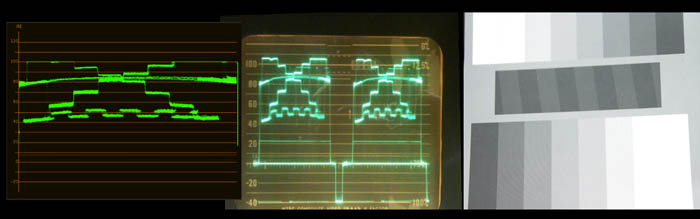

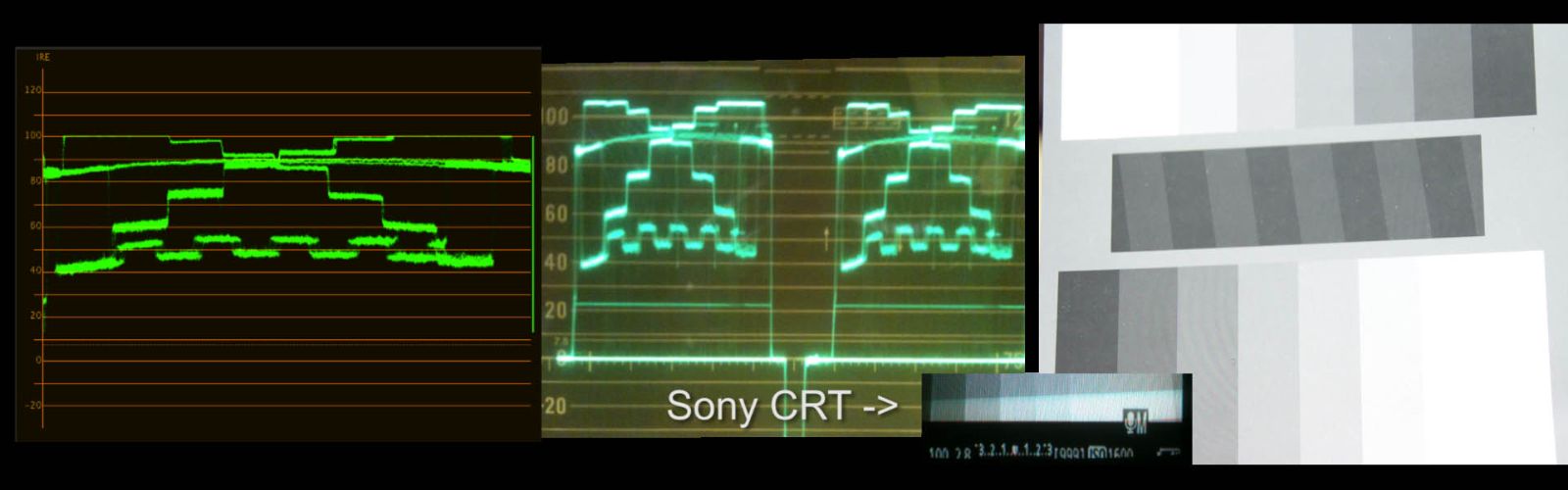

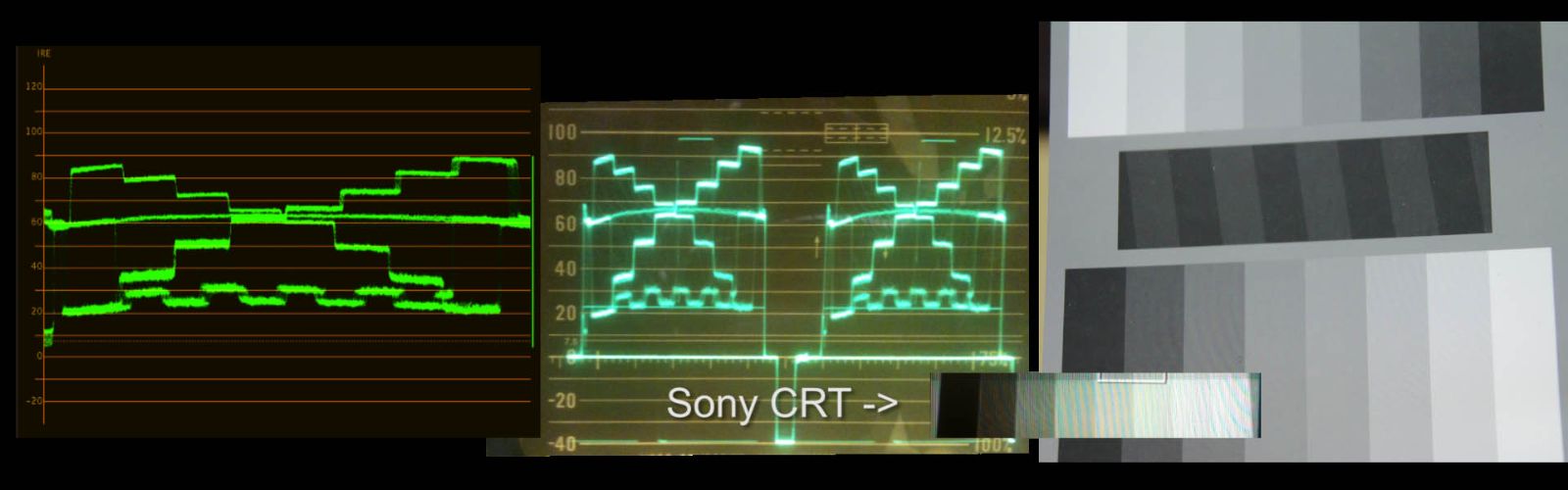

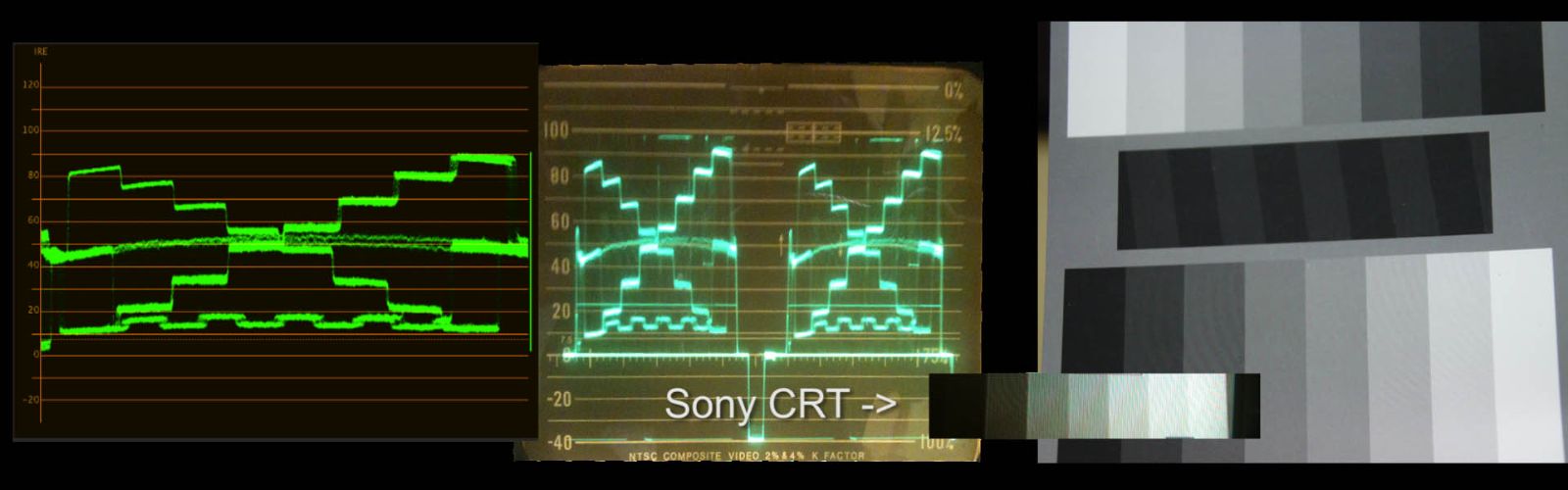

At first glance, lower contrast settings do indeed give you more image to work with. It really seems like the contrast adjustment is much more like a gamma plus pedestal adjustment. Adjusting contrast moves not just the low end of the picture but the mids around too.
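To make the "gamma plus pedestal" idea concrete, here is a rough sketch of what such a curve does to black, mid, and white levels. This is only a toy model: the function name, the formula, and the numbers are my own assumptions for illustration, not Canon's actual in-camera processing.

```python
def gamma_pedestal(x, gamma=1.0, pedestal=0.0):
    """Toy tone curve for a normalized luma value x in [0, 1].

    pedestal lifts the black level; gamma bends the curve, which is
    what moves the mids. Both parameters are hypothetical stand-ins
    for whatever the camera's contrast setting really does.
    """
    lifted = pedestal + (1.0 - pedestal) * x  # raise blacks, keep white at 1.0
    return lifted ** gamma                    # gamma < 1 brightens the mids


# Compare a neutral curve with a "flatter" one at black, mid, and white.
for level in (0.0, 0.5, 1.0):
    neutral = gamma_pedestal(level)
    flat = gamma_pedestal(level, gamma=0.8, pedestal=0.05)
    print(f"in={level:.2f}  neutral={neutral:.3f}  flat={flat:.3f}")
```

A pure pedestal change would only shift the bottom of the curve. The gamma term is what drags the mids along with it, which is the behavior the scopes suggest.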

My first couple of tests actually show a pretty large dynamic range, holding not only the sky and snow, but also well into the shadows. Technically, the image is underexposed since the whites aren’t quite white. However, I can live with that, seeing that the camera is really holding an enormous range of brightness. My handheld light meter bit the dust a few months ago, but after 20 years of service I’m not complaining. I don’t have a formal light range reading for you, but it’s easy to see this is at least 10 stops. It’s easy to set up tests with charts and make certain claims. It’s quite another thing to make usable images, or to see those specs in action where you can say you got information in a bright or dark area that you might not have with another camera.

For a finished shot it would be easy to grade this a bit and bring the snow up, and bring the sky down a bit.

Looking between the picture styles you can see differences in color rendition and dynamic range. While it’s probably not cool to say you use it, the Standard setting actually holds up very well, and better than some of the other picture styles.

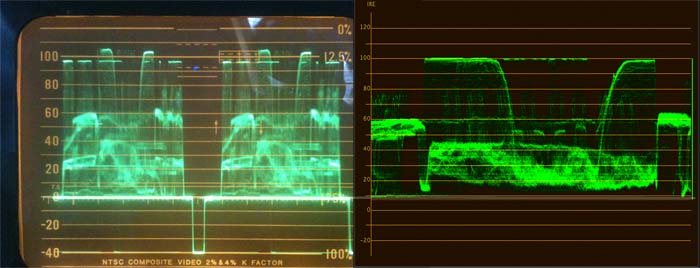

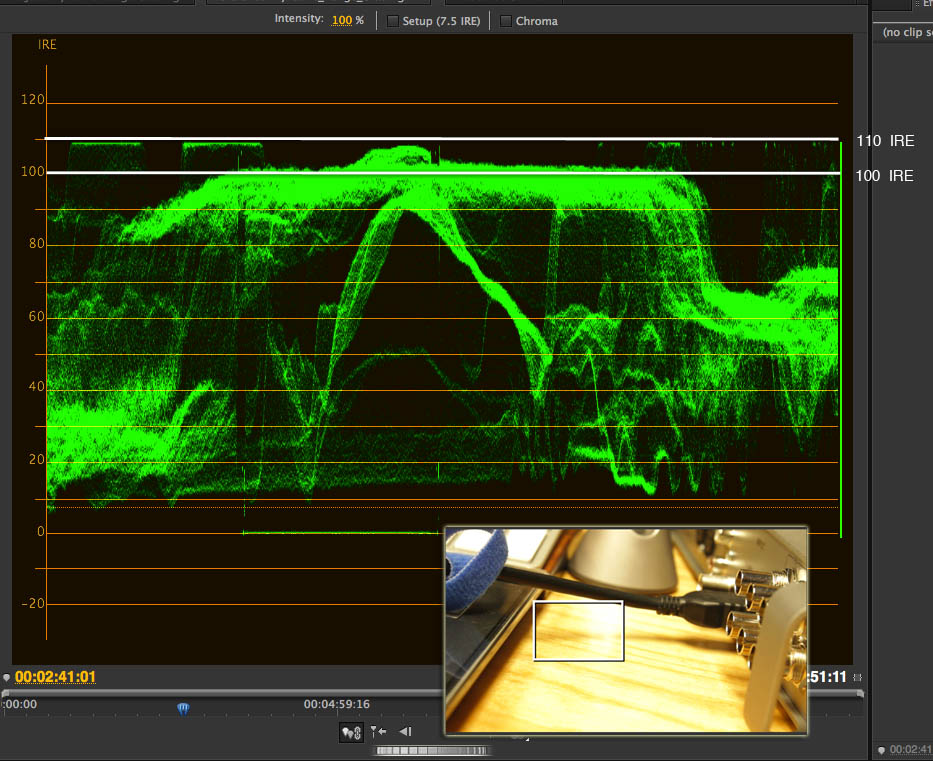

Clearly the contrast setting is not just changing the dark areas; it’s much more of a gamma-type adjustment. If you scope the images you can see this. Another interesting thing I saw was that the EOS codec hard clips at 100 IRE. Now if 255 = 100 IRE, I’m somewhat OK with this. However, if 235 = 100 IRE, I’m not. In 8-bit color space you need every last step of range you can get. Why throw away 20 more steps of gradation, especially in the high end? My JVC HD100 lets you shoot over-brights to 110 IRE, and that’s where I have the camera set. When you have to grade compressed 8-bit material, every little bit counts. On the positive side, the codec does allow pure 0 IRE level blacks, adding 13 steps on the bottom end.
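The 255 versus 235 question comes down to simple arithmetic. Here is a small sketch of it, assuming a straight linear mapping between IRE and 8-bit code values. The function name and the two black/white pairs are my own illustration; I have not confirmed which mapping the camera actually uses.

```python
def ire_to_code(ire, black, white):
    """Linearly map an IRE level to an 8-bit code value.

    black and white are the code values assumed for 0 and 100 IRE.
    """
    return round(black + (white - black) * ire / 100.0)


# Two hypothetical mappings for the same 8-bit file.
mappings = {"full range (0-255)": (0, 255), "video range (16-235)": (16, 235)}

for name, (black, white) in mappings.items():
    top = ire_to_code(100, black, white)
    unused_above = 255 - top  # codes left empty by a hard clip at 100 IRE
    print(f"{name}: 100 IRE = code {top}, unused codes above = {unused_above}")
```

If 100 IRE sits at code 235, a hard clip there leaves the top 20 code values empty, which is the gradation I would rather not give up.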

Now on the side of shooting lower saturation, I’m going to go against what some other folks are running around saying. There is no net benefit to reducing color saturation, but there is plenty to lose when shooting. Quite simply, if you reduce your saturation, you are using fewer bits to record gradation. So instead of getting 8 bits, you’re reducing yourself to 7 bits, or even 6 bits. Don’t believe me? Shoot some shots with a lot of color gradation in them at various settings. Then go into your NLE of choice, open up your scopes, and color correct that material to look like it’s got a full range of color again. Out will pop missing steps of color. That’s right, those empty sections are exactly that: areas of no gradation. You are jumping from one color value to another several units away with nothing in between.

So unless you are shooting material with very high saturation, where you may already be clipping values, stay put in the middle setting, or perhaps -1 if you really must. Dialing color down below that won’t net you anything except loss of color information that’s already been reduced by the H.264 compression. Now just to irk those turn-down-the-saturation folks, the very first project I shot with my 550D had its saturation turned up to +1. That project won an award and had everyone buzzing about how great the images looked.
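If you want to see the missing steps without shooting anything, here is a toy simulation of the saturation argument. It is a sketch under loud assumptions: chroma is treated as a simple signed 8-bit ramp, the saturation factors are made-up stand-ins for the camera's settings, and H.264 compression is ignored entirely.

```python
def record_and_regrade(saturation):
    """Count distinct chroma values after desaturated recording and after regrading.

    saturation is a hypothetical in-camera scale factor (1.0 = unchanged).
    Chroma is modeled as signed offsets from neutral, -128..127.
    """
    offsets = range(-128, 128)  # every possible 8-bit chroma code
    # In-camera: scale chroma down, then quantize to whole 8-bit steps.
    recorded = {round(o * saturation) for o in offsets}
    # In post: scale back up to full saturation, clamped to the 8-bit range.
    regraded = {max(-128, min(127, round(r / saturation))) for r in recorded}
    return len(recorded), len(regraded)


for factor in (1.0, 0.5, 0.25):
    rec, out = record_and_regrade(factor)
    print(f"saturation x{factor}: {rec} values recorded, {out} values after regrade")
```

Scaling the color back up in post spreads the surviving values apart but cannot create new ones in between, so at half saturation you end up with roughly half as many distinct steps, which is about one bit lost. Those holes are the gaps that show up on the scopes.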

All right, just as another comparison, here is a 7D vs Alexa test. It seems to show the same thing I experienced: the Canon cameras don’t like overexposure and clip highlights pretty fast.

Alexa vs 7d latitude tests from Nick Paton ACS on Vimeo.

So I’ll welcome your comments on this.